Comprehensive Guide to Edge Video Analytics

Article

In today's data-driven world, traditional cloud-based video processing systems face growing challenges with bandwidth limitations, latency issues, and privacy concerns. Edge Video Analytics emerges as the transformative solution, bringing intelligence directly to data sources rather than moving massive amounts of data to centralized cloud infrastructure.

This comprehensive guide walks through the complete lifecycle of implementing edge video analytics systems—from foundational concepts and architecture design to practical deployment and ongoing optimization strategies. Whether you're modernizing existing surveillance infrastructure or developing new vision-based applications, this guide provides the actionable insights needed for successful implementation.

Key Takeaways:

Master the architectural principles that distinguish effective edge analytics systems from suboptimal implementations

Learn to properly select and configure edge hardware based on specific video processing workloads

Develop deployment strategies that balance processing distribution between edge, fog, and cloud layers

Implement practical optimization techniques that maximize performance on resource-constrained devices

Apply these principles to real-world use cases with production-ready code samples and implementation patterns

Prerequisites

Before diving into implementation, ensure your development environment meets these requirements:

Hardware Requirements

Edge Devices:

High Performance: NVIDIA Jetson AGX Orin (64GB) or Xavier AGX

Mid-Range: NVIDIA Jetson Orin NX or Xavier NX

Low-Power: Jetson Nano, Google Coral Dev Board, or Intel NCS2

Custom Solutions: x86 systems with discrete NVIDIA RTX A2000/A4000/A5000 or AMD Radeon Pro GPUs

Camera Requirements:

Minimum resolution: 1080p for detailed analysis

Frame rate: 15-30 FPS depending on use case

Wide dynamic range (WDR) for varying lighting conditions

RTSP stream support for direct integration

Software Stack

Base OS: Ubuntu 20.04 LTS or Ubuntu 22.04 LTS

NVIDIA Components:

JetPack 5.1 (for Jetson platforms) with CUDA 11.4+

TensorRT 8.4+ for model optimization

Development Environment:

Python 3.8+ with virtual environment management

Docker 20.10+ and Docker Compose V2

ML Frameworks:

TensorFlow 2.9+ or PyTorch 1.13+

ONNX Runtime 1.13+ for model interoperability

DeepStream 6.1+ (NVIDIA) for video pipeline optimization

Environment Setup

# Create and activate a Python virtual environment

python3 -m venv ~/venv/edge-analytics

source ~/venv/edge-analytics/bin/activate

# Install core dependencies

pip install --upgrade pip setuptools wheel

pip install numpy opencv-python-headless tensorflow

# For Jetson devices, install hardware-specific optimizations

if [[ $(uname -m) == "aarch64" ]]; then

# Install Jetson-specific packages

sudo apt update

sudo apt install -y libhdf5-dev libopenblas-dev libopenmpi-dev

pip install --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v51 tensorflow

fi

# Verify GPU accessibility

python -c "import tensorflow as tf; print('GPU Available:', tf.test.is_gpu_available())"

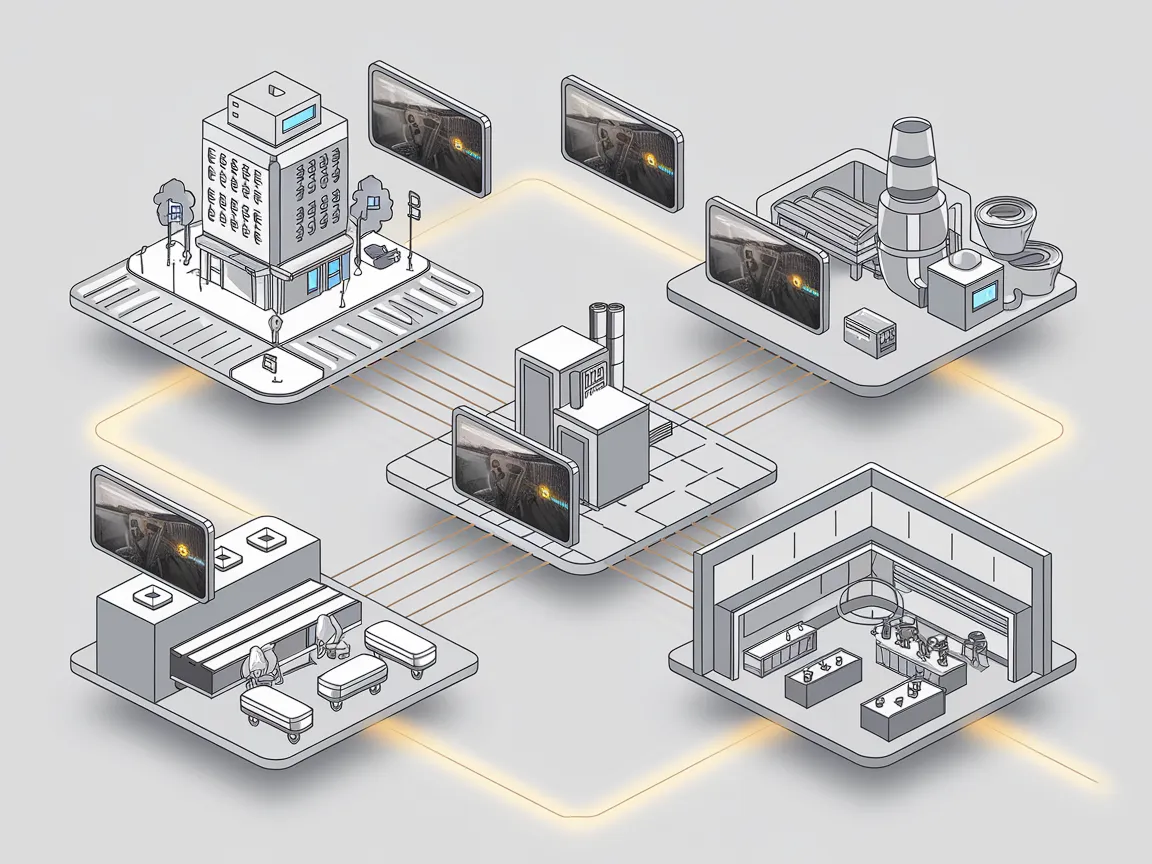

Architecture Design for Edge Video Analytics

Key Architectural Patterns

Edge-Only Processing

When to use: Privacy-critical applications, offline deployment scenarios

Advantages: Lowest latency, no network dependencies, complete data privacy

Disadvantages: Limited processing power, model size constraints

Edge-Cloud Hybrid

When to use: Enhanced analytics requiring cloud AI capabilities

Advantages: Balanced performance with scalability

Disadvantages: Requires network connectivity, higher system complexity

Federated Edge Learning

When to use: Systems that benefit from continuous model improvement

Advantages: Models improve over time without raw data transfer

Disadvantages: Complex implementation, requires device management

Reference Architecture Diagram

┌───────────────────┐ ┌───────────────────┐ ┌─────────────────┐

│ EDGE DEVICE │ │ FOG LAYER │ │ CLOUD │

│ ┌─────────────┐ │ │ ┌─────────────┐ │ │ ┌─────────────┐ │

│ │ VIDEO INPUT ├──┼─────┼─►│ AGGREGATION │ │ │ │ ADVANCED AI │ │

│ └─────┬───────┘ │ │ └─────┬───────┘ │ │ └─────┬───────┘ │

│ │ │ │ │ │ │ │ │

│ ┌─────▼───────┐ │ │ ┌─────▼───────┐ │ │ ┌─────▼───────┐ │

│ │ PREPROCESSING│ │ │ │ DISTRIBUTED│ │ │ │ LONG-TERM │ │

│ └─────┬───────┘ │ │ │ PROCESSING │ │ │ │ STORAGE │ │

│ │ │ │ └─────┬───────┘ │ │ └─────────────┘ │

│ ┌─────▼───────┐ │ │ │ │ │ │

│ │ INFERENCE │ │ │ ┌─────▼───────┐ │ │ ┌─────────────┐ │

│ └─────┬───────┘ │ │ │ BUSINESS │ │ │ │ MODEL │ │

│ │ │ │ │ RULES │ │ │ │ TRAINING │ │

│ ┌─────▼───────┐ │ │ └─────┬───────┘ │ │ └─────┬───────┘ │

│ │ LOCAL │ │ │ │ │ │ │ │

│ │ DECISION ├──┼─────┼────────┘ │ │ │ │

│ └─────┬───────┘ │ │ │ │ │ │

│ │ │ │ │ │ │ │

│ ┌─────▼───────┐ │ │ │ │ │ │

│ │ METADATA ├──┼─────┼───────────────────┼─────┼───────┘ │

│ │ EXTRACTION │ │ │ │ │ │

│ └─────────────┘ │ │ │ │ │

└───────────────────┘ └───────────────────┘ └─────────────────┘

Implementation Guide

Step 1: Video Capture Pipeline

Set up a robust video capture system optimized for edge processing:

import cv2

import time

import logging

import threading

from queue import Queue

class VideoCapture:

def __init__(self, source, buffer_size=10):

self.source = source

self.buffer = Queue(maxsize=buffer_size)

self.logger = logging.getLogger("VideoCapture")

self.stopped = False

self.last_read_time = 0

self.fps_stats = []

def start(self):

"""Start the video capture thread"""

self.stopped = False

thread = threading.Thread(target=self._capture_loop)

thread.daemon = True

thread.start()

return self

def _capture_loop(self):

"""Main capture loop running in separate thread"""

# Attempt RTSP connection with retries

retry_count = 0

max_retries = 5

while retry_count < max_retries and not self.stopped:

try:

# Configure capture with hardware acceleration if available

self.cap = cv2.VideoCapture(self.source, cv2.CAP_GSTREAMER)

# Check if opened successfully

if not self.cap.isOpened():

raise Exception(f"Failed to open video source: {self.source}")

# Set capture properties

self.cap.set(cv2.CAP_PROP_BUFFERSIZE, 1) # Minimize buffering for real-time

# Capture frames continuously

while not self.stopped:

ret, frame = self.cap.read()

if not ret:

self.logger.warning("Failed to read frame")

break

# Calculate FPS

current_time = time.time()

if self.last_read_time > 0:

fps = 1 / (current_time - self.last_read_time)

self.fps_stats.append(fps)

# Keep only recent measurements

if len(self.fps_stats) > 30:

self.fps_stats.pop(0)

self.last_read_time = current_time

# Clear buffer if full to avoid processing stale frames

if self.buffer.full():

try:

self.buffer.get_nowait()

except:

pass

# Add current frame to buffer

self.buffer.put(frame)

# Cleanup on loop exit

self.cap.release()

break # Exit retry loop on clean finish

except Exception as e:

self.logger.error(f"Capture error: {str(e)}")

retry_count += 1

time.sleep(2) # Wait before retry

if retry_count >= max_retries:

self.logger.error(f"Failed to connect to {self.source} after {max_retries} attempts")

def read(self):

"""Get the next frame from the buffer"""

return None if self.buffer.empty() else self.buffer.get()

def stop(self):

"""Stop the video capture thread"""

self.stopped = True

# Drain the buffer

while not self.buffer.empty():

self.buffer.get()

def get_avg_fps(self):

"""Get average FPS of recent frames"""

return sum(self.fps_stats) / len(self.fps_stats) if self.fps_stats else 0

Step 2: Preprocessing Pipeline

Implement efficient frame preprocessing to maximize inference performance:

import cv2

import numpy as np

class FrameProcessor:

def __init__(self, target_size=(416, 416)):

self.target_size = target_size

def preprocess(self, frame):

"""Prepare frame for inference with resize and normalization"""

# Resize while maintaining aspect ratio

h, w = frame.shape[:2]

# Calculate resize dimensions

scale = min(self.target_size[0] / w, self.target_size[1] / h)

new_w, new_h = int(w * scale), int(h * scale)

# Resize the image

resized = cv2.resize(frame, (new_w, new_h), interpolation=cv2.INTER_AREA)

# Create canvas with padding

canvas = np.full((self.target_size[1], self.target_size[0], 3), 128, dtype=np.uint8)

# Center the resized image on the canvas

x_offset = (self.target_size[0] - new_w) // 2

y_offset = (self.target_size[1] - new_h) // 2

canvas[y_offset:y_offset+new_h, x_offset:x_offset+new_w] = resized

# Normalize pixel values to [0, 1]

normalized = canvas.astype(np.float32) / 255.0

# Store transformation parameters for later result mapping

self.transform_params = {

'original_dims': (w, h),

'new_dims': (new_w, new_h),

'offsets': (x_offset, y_offset),

'scale': scale

}

return normalized

def map_detection_to_original(self, detection):

"""Map detection coordinates back to the original frame"""

# Extract parameters

x_offset, y_offset = self.transform_params['offsets']

scale = self.transform_params['scale']

# Convert normalized box coordinates to absolute

x, y, w, h = detection[:4]

# Adjust for padding and scale

orig_x = (x - x_offset) / scale

orig_y = (y - y_offset) / scale

orig_w = w / scale

orig_h = h / scale

return [orig_x, orig_y, orig_w, orig_h] + detection[4:]

Step 3: Model Loading and Inference

Load and optimize ML models for edge deployment:

import os

import time

import tensorflow as tf

import numpy as np

import logging

class ObjectDetector:

def __init__(self, model_path, confidence_threshold=0.5):

self.model_path = model_path

self.confidence_threshold = confidence_threshold

self.logger = logging.getLogger("ObjectDetector")

self.load_model()

def load_model(self):

"""Load and optimize the model for inference"""

self.logger.info(f"Loading model from {self.model_path}")

try:

# Check if model is in TensorFlow SavedModel format

if os.path.isdir(self.model_path):

# Load saved model with optimization for edge devices

self.model = tf.saved_model.load(self.model_path)

self.logger.info("Loaded TensorFlow SavedModel")

# Get the concrete function for inference

self.infer = self.model.signatures["serving_default"]

self.input_name = list(self.infer.structured_input_signature[1].keys())[0]

self.output_name = list(self.infer.structured_outputs.keys())[0]

# Create optimized inference function

self.logger.info("Creating optimized inference function")

# Check hardware capabilities

gpus = tf.config.list_physical_devices('GPU')

if gpus:

# Configure GPU memory growth

for gpu in gpus:

tf.config.experimental.set_memory_growth(gpu, True)

# Use mixed precision for faster inference

tf.keras.mixed_precision.set_global_policy('mixed_float16')

self.logger.info(f"GPU acceleration enabled with {len(gpus)} devices")

else:

raise ValueError(f"Unsupported model format at {self.model_path}")

except Exception as e:

self.logger.error(f"Failed to load model: {str(e)}")

raise

def predict(self, preprocessed_frame):

"""Run inference on preprocessed frame"""

try:

# Ensure frame is in the correct format

if len(preprocessed_frame.shape) == 3:

# Add batch dimension if not present

frame_tensor = np.expand_dims(preprocessed_frame, axis=0)

else:

frame_tensor = preprocessed_frame

# Convert to TensorFlow tensor

input_tensor = tf.convert_to_tensor(frame_tensor)

# Run inference

start_time = time.time()

result = self.infer(**{self.input_name: input_tensor})

inference_time = time.time() - start_time

# Extract detections

detections = self._process_detections(result)

return detections, inference_time

except Exception as e:

self.logger.error(f"Inference error: {str(e)}")

return [], 0

def _process_detections(self, result):

"""Process raw detection results into a standardized format"""

# Extract the relevant output (depends on model architecture)

output = result[self.output_name].numpy()

# Parse detections (format depends on model)

# This example assumes output format: [batch, num_detections, (y1, x1, y2, x2, score, class)]

detections = []

# Iterate through detected objects

for detection in output[0]:

score = detection[4]

if score >= self.confidence_threshold:

# Convert from [y1, x1, y2, x2] to [x, y, width, height]

y1, x1, y2, x2 = detection[:4]

x = x1

y = y1

width = x2 - x1

height = y2 - y1

class_id = int(detection[5])

detections.append([x, y, width, height, score, class_id])

return detections

Step 4: Results Processing and Post-processing

Process detected objects and implement business logic:

class ResultProcessor:

def __init__(self, class_names, roi_zones=None):

self.class_names = class_names

self.roi_zones = roi_zones or {} # Dict of named polygon zones

self.tracking_history = {} # For object tracking

self.object_counter = {} # For counting objects by class and zone

def process_frame(self, frame, detections, frame_id):

"""Process detections for a given frame"""

results = {

'frame_id': frame_id,

'timestamp': time.time(),

'detections': [],

'events': [],

'zone_counts': {zone: {} for zone in self.roi_zones}

}

# Process each detection

for det in detections:

x, y, w, h, conf, class_id = det

# Create structured detection object

detection = {

'bbox': [x, y, w, h],

'confidence': float(conf),

'class_id': int(class_id),

'class_name': self.class_names[class_id] if class_id < len(self.class_names) else 'unknown'

}

# Check zone intersections

det_center = (x + w/2, y + h/2)

for zone_name, zone_polygon in self.roi_zones.items():

if self._point_in_polygon(det_center, zone_polygon):

detection['zones'] = detection.get('zones', []) + [zone_name]

# Update zone counters

class_name = detection['class_name']

if class_name not in results['zone_counts'][zone_name]:

results['zone_counts'][zone_name][class_name] = 0

results['zone_counts'][zone_name][class_name] += 1

# Add to results

results['detections'].append(detection)

# Apply business logic and generate events

results['events'] = self._apply_business_rules(results)

return results

def _point_in_polygon(self, point, polygon):

"""Check if a point is inside a polygon using ray casting algorithm"""

x, y = point

n = len(polygon)

inside = False

p1x, p1y = polygon[0]

for i in range(1, n + 1):

p2x, p2y = polygon[i % n]

if y > min(p1y, p2y):

if y <= max(p1y, p2y):

if x <= max(p1x, p2x):

if p1y != p2y:

xinters = (y - p1y) * (p2x - p1x) / (p2y - p1y) + p1x

if p1x == p2x or x <= xinters:

inside = not inside

p1x, p1y = p2x, p2y

return inside

def _apply_business_rules(self, results):

"""Apply business rules to generate events"""

events = []

# Example rule: Detect when people count exceeds threshold in a zone

for zone_name, counts in results['zone_counts'].items():

if 'person' in counts and counts['person'] > 5:

events.append({

'type': 'crowd_detected',

'zone': zone_name,

'count': counts['person'],

'timestamp': results['timestamp']

})

# Example rule: Detect vehicles in restricted zones

for detection in results['detections']:

if detection['class_name'] in ['car', 'truck', 'bus']:

if 'zones' in detection and 'restricted_zone' in detection['zones']:

events.append({

'type': 'vehicle_in_restricted_zone',

'vehicle_type': detection['class_name'],

'confidence': detection['confidence'],

'timestamp': results['timestamp']

})

return events

Step 5: Main Application Loop

Integrate all components into a cohesive application:

import logging

import json

import threading

import time

import cv2

import numpy as np

import argparse

import signal

import sys

import os

# Configure logging

logging.basicConfig(

level=logging.INFO,

format='%(asctime)s - %(name)s - %(levelname)s - %(message)s',

handlers=[

logging.FileHandler("edge_analytics.log"),

logging.StreamHandler()

]

)

# Main application class

class EdgeVideoAnalytics:

def __init__(self, config_path):

self.logger = logging.getLogger("EdgeVideoAnalytics")

self.running = False

self.load_config(config_path)

self.initialize_components()

def load_config(self, config_path):

"""Load application configuration"""

try:

with open(config_path, 'r') as f:

self.config = json.load(f)

self.logger.info(f"Loaded configuration from {config_path}")

except Exception as e:

self.logger.error(f"Failed to load config: {str(e)}")

raise

def initialize_components(self):

"""Initialize all system components"""

try:

# Initialize video capture

self.video_source = self.config.get('video_source', 0)

self.capture = VideoCapture(self.video_source).start()

time.sleep(2) # Allow camera to warm up

# Initialize frame processor

model_input_size = tuple(self.config.get('model_input_size', (416, 416)))

self.frame_processor = FrameProcessor(target_size=model_input_size)

# Initialize object detector

model_path = self.config.get('model_path')

confidence_threshold = self.config.get('confidence_threshold', 0.5)

self.detector = ObjectDetector(model_path, confidence_threshold)

# Initialize result processor

class_names = self.config.get('class_names', [])

roi_zones = self.config.get('roi_zones', {})

self.result_processor = ResultProcessor(class_names, roi_zones)

# Initialize result writer

output_dir = self.config.get('output_dir', './output')

os.makedirs(output_dir, exist_ok=True)

self.output_path = os.path.join(output_dir, f"results_{int(time.time())}.json")

# Initialize performance metrics

self.perf_metrics = {

'frame_count': 0,

'processing_times': [],

'inference_times': [],

'start_time': time.time()

}

self.logger.info("All components initialized successfully")

except Exception as e:

self.logger.error(f"Initialization error: {str(e)}")

raise

def run(self):

"""Main processing loop"""

self.running = True

self.logger.info("Starting analytics processing loop")

# Register signal handlers for clean shutdown

signal.signal(signal.SIGINT, self.signal_handler)

signal.signal(signal.SIGTERM, self.signal_handler)

frame_id = 0

try:

while self.running:

loop_start = time.time()

# Get frame from capture

frame = self.capture.read()

if frame is None:

self.logger.warning("No frame available, waiting...")

time.sleep(0.1)

continue

# Preprocess frame

preprocessed = self.frame_processor.preprocess(frame)

# Run inference

detections, inference_time = self.detector.predict(preprocessed)

# Map detections back to original frame coordinates

mapped_detections = [

self.frame_processor.map_detection_to_original(det)

for det in detections

]

# Process results

results = self.result_processor.process_frame(

frame, mapped_detections, frame_id

)

# Write results periodically (every 10 frames)

if frame_id % 10 == 0:

self.write_results(results)

# Update metrics

self.perf_metrics['frame_count'] += 1

self.perf_metrics['inference_times'].append(inference_time)

processing_time = time.time() - loop_start

self.perf_metrics['processing_times'].append(processing_time)

# Log performance every 100 frames

if frame_id % 100 == 0:

self.log_performance()

frame_id += 1

except Exception as e:

self.logger.error(f"Processing error: {str(e)}")

finally:

self.shutdown()

def write_results(self, results):

"""Write detection results to output file"""

try:

with open(self.output_path, 'a') as f:

f.write(json.dumps(results) + '\n')

except Exception as e:

self.logger.error(f"Failed to write results: {str(e)}")

def log_performance(self):

"""Log performance metrics"""

if not self.perf_metrics['processing_times']:

return

avg_proc_time = sum(self.perf_metrics['processing_times']) / len(self.perf_metrics['processing_times'])

avg_inference_time = sum(self.perf_metrics['inference_times']) / len(self.perf_metrics['inference_times'])

fps = 1.0 / avg_proc_time if avg_proc_time > 0 else 0

capture_fps = self.capture.get_avg_fps()

elapsed = time.time() - self.perf_metrics['start_time']

self.logger.info(

f"Performance: {fps:.2f} FPS (processing), {capture_fps:.2f} FPS (capture), "

f"{avg_proc_time*1000:.2f}ms/frame, {avg_inference_time*1000:.2f}ms inference, "

f"{self.perf_metrics['frame_count']} frames in {elapsed:.1f}s"

)

# Reset metrics for next window

self.perf_metrics['processing_times'] = []

self.perf_metrics['inference_times'] = []

def signal_handler(self, sig, frame):

"""Handle termination signals"""

self.logger.info(f"Received signal {sig}, shutting down...")

self.running = False

def shutdown(self):

"""Clean shutdown of all components"""

self.logger.info("Shutting down...")

self.running = False

# Stop video capture

if hasattr(self, 'capture'):

self.capture.stop()

# Final performance log

self.log_performance()

self.logger.info("Shutdown complete")

# Entry point

if __name__ == "__main__":

parser = argparse.ArgumentParser(description="Edge Video Analytics")

parser.add_argument("--config", type=str, default="config.json", help="Path to configuration file")

args = parser.parse_args()

# Create and run the application

app = EdgeVideoAnalytics(args.config)

app.run()

Optimization Techniques

Optimizing edge video analytics for maximum efficiency is essential when working with resource-constrained devices. Here are proven techniques to improve performance:

1. Model Optimization

TensorRT Conversion

NVIDIA's TensorRT significantly accelerates inference on compatible hardware:

import tensorrt as trt

import tensorflow as tf

from tensorflow.python.compiler.tensorrt import trt_convert

def convert_to_tensorrt(saved_model_path, precision='FP16'):

"""Convert TensorFlow SavedModel to TensorRT optimized model"""

# Set precision mode

if precision == 'FP16':

precision_mode = trt_convert.TrtPrecisionMode.FP16

elif precision == 'INT8':

precision_mode = trt_convert.TrtPrecisionMode.INT8

else:

precision_mode = trt_convert.TrtPrecisionMode.FP32

# Configure conversion parameters

conversion_params = trt_convert.DEFAULT_TRT_CONVERSION_PARAMS

conversion_params = conversion_params._replace(

max_workspace_size_bytes=(1 << 30), # 1GB workspace

precision_mode=precision_mode,

minimum_segment_size=3, # Minimum number of nodes in an engine

maximum_cached_engines=100

)

# Create converter and convert

converter = trt_convert.TrtGraphConverterV2(

input_saved_model_dir=saved_model_path,

conversion_params=conversion_params

)

# Perform conversion (can take some time)

converter.convert()

# Save the optimized model

trt_model_path = f"{saved_model_path}_trt_{precision}"

converter.save(trt_model_path)

return trt_model_path

Quantization

Model quantization reduces memory footprint and increases inference speed with minimal accuracy loss:

def quantize_model(model_path, output_path, quantization_type='dynamic'):

"""Quantize TensorFlow model to improve inference performance"""

# Load model

model = tf.saved_model.load(model_path)

# Define converter

converter = tf.lite.TFLiteConverter.from_saved_model(model_path)

if quantization_type == 'dynamic':

# Apply dynamic range quantization

converter.optimizations = [tf.lite.Optimize.DEFAULT]

elif quantization_type == 'float16':

# Apply float16 quantization

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_types = [tf.float16]

elif quantization_type == 'int8':

# Apply full integer quantization (requires representative dataset)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_dataset_gen

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

converter.inference_input_type = tf.int8

converter.inference_output_type = tf.int8

# Convert the model

tflite_model = converter.convert()

# Save the model

with open(output_path, 'wb') as f:

f.write(tflite_model)

return output_path

2. Pipeline Optimization

Frame Sampling

Process only necessary frames to reduce computational load:

class AdaptiveFrameSampler:

def __init__(self, initial_interval=1):

self.base_interval = initial_interval

self.current_interval = initial_interval

self.frame_count = 0

self.last_processing_time = 0

self.target_fps = 15 # Target processing rate

def should_process_frame(self, processing_time):

"""Determine if current frame should be processed"""

self.frame_count += 1

# Update sampling interval based on processing performance

if processing_time > 0:

self.last_processing_time = processing_time

achieved_fps = 1.0 / processing_time

# Adjust interval to meet target FPS

if achieved_fps < self.target_fps * 0.8: # Below 80% of target

self.current_interval = min(30, self.current_interval + 1)

elif achieved_fps > self.target_fps * 1.2: # Above 120% of target

self.current_interval = max(1, self.current_interval - 1)

# Determine if this frame should be processed

return self.frame_count % self.current_interval == 0

ROI-Based Processing

Process only regions of interest to conserve resources:

def apply_roi_mask(frame, roi_polygons):

"""Apply ROI mask to frame to limit processing area"""

# Create mask of zeros (black)

mask = np.zeros(frame.shape[:2], dtype=np.uint8)

# Draw ROI polygons in white

for polygon in roi_polygons:

points = np.array(polygon, np.int32)

points = points.reshape((-1, 1, 2))

cv2.fillPoly(mask, [points], 255)

# Apply mask to frame

masked_frame = cv2.bitwise_and(frame, frame, mask=mask)

return masked_frame

3. Multi-Processing Architecture

Implement parallel processing pipelines for higher throughput:

import multiprocessing as mp

import queue

def capture_worker(video_source, frame_queue, command_queue):

"""Worker process for video capture"""

capture = VideoCapture(video_source).start()

running = True

while running:

# Check for commands

try:

command = command_queue.get_nowait()

if command == "STOP":

running = False

except queue.Empty:

pass

# Get frame and add to queue

frame = capture.read()

if frame is not None:

# If queue is full, remove oldest frame

if frame_queue.full():

try:

frame_queue.get_nowait()

except queue.Empty:

pass

frame_queue.put(frame)

time.sleep(0.01) # Small delay to prevent CPU hogging

# Cleanup

capture.stop()

def inference_worker(frame_queue, result_queue, command_queue, model_path, config):

"""Worker process for inference"""

# Initialize components

frame_processor = FrameProcessor(tuple(config.get('model_input_size', (416, 416))))

detector = ObjectDetector(model_path, config.get('confidence_threshold', 0.5))

running = True

while running:

# Check for commands

try:

command = command_queue.get_nowait()

if command == "STOP":

running = False

except queue.Empty:

pass

# Get frame and process

try:

frame, frame_id = frame_queue.get(timeout=1.0)

# Process frame

preprocessed = frame_processor.preprocess(frame)

detections, inference_time = detector.predict(preprocessed)

# Map detections to original coordinates

mapped_detections = [

frame_processor.map_detection_to_original(det)

for det in detections

]

# Add to result queue

result_queue.put((frame_id, frame, mapped_detections, inference_time))

except queue.Empty:

continue

# Signal completion

result_queue.put(None)

Real-World Applications and Case Studies

Smart Cities: Traffic Management

Implementation Example

Here's how a traffic monitoring system utilizes edge video analytics:

# Traffic Analyzer class for smart city applications

class TrafficAnalyzer(ResultProcessor):

def __init__(self, class_names, roi_zones=None):

super().__init__(class_names, roi_zones)

self.vehicle_counts = {zone: {} for zone in roi_zones} if roi_zones else {}

self.speed_estimates = {}

self.congestion_thresholds = {

'low': 5,

'medium': 15,

'high': 30

}

def process_frame(self, frame, detections, frame_id):

# Process using base class

results = super().process_frame(frame, detections, frame_id)

# Track vehicles and estimate speeds

self._track_vehicles(frame, detections, frame_id)

# Calculate congestion levels

for zone_name in self.roi_zones:

vehicle_count = sum(results['zone_counts'][zone_name].get(vehicle_type, 0)

for vehicle_type in ['car', 'truck', 'bus', 'motorcycle'])

if vehicle_count >= self.congestion_thresholds['high']:

congestion_level = 'high'

elif vehicle_count >= self.congestion_thresholds['medium']:

congestion_level = 'medium'

else:

congestion_level = 'low'

results['congestion'] = {

'zone': zone_name,

'vehicle_count': vehicle_count,

'level': congestion_level

}

return results

def _track_vehicles(self, frame, detections, frame_id):

# Implementation of multi-object tracking

# ...tracking code here...

pass

Case Study: City of Barcelona Smart Traffic System

Barcelona implemented edge analytics at 200 key intersections to improve traffic flow:

Challenge: Traffic congestion causing 25-minute average delays during peak hours.

Solution: Edge devices with computer vision mounted at intersections, processing video locally.

Implementation:

Deployed NVIDIA Jetson Xavier devices at traffic lights

Used YOLOv5 models optimized with TensorRT

Edge-to-fog architecture with 5G connectivity

Results:

17% reduction in average commute times

23% decrease in traffic-related emissions

Real-time traffic pattern optimization

Retail Analytics: Customer Behavior

Modern retail operations leverage edge analytics to understand customer behavior patterns:

class RetailAnalytics(ResultProcessor):

def __init__(self, class_names, store_layout=None):

super().__init__(class_names, store_layout)

self.customer_tracks = {}

self.dwell_times = {zone: [] for zone in store_layout} if store_layout else {}

self.interaction_points = {}

def process_frame(self, frame, detections, frame_id):

# Extract people detections

people = [d for d in detections if d[5] == 0] # Assuming class_id 0 is person

# Track customers through the store

self._update_customer_tracks(people, frame_id)

# Calculate dwell times in different zones

self._calculate_dwell_times(frame_id)

# Detect product interactions

self._detect_product_interactions(people, frame_id)

# Calculate store metrics

results = {

'frame_id': frame_id,

'timestamp': time.time(),

'customer_count': len(self.customer_tracks),

'zone_activity': {zone: len(self.dwell_times[zone]) for zone in self.dwell_times},

'interaction_hotspots': self._get_interaction_hotspots()

}

return results

Case Study: European Grocery Chain

A major European grocery retailer deployed edge analytics to optimize store layouts:

Challenge: Inefficient store layouts leading to poor product discovery.

Solution: Edge-based customer journey analysis system.

Implementation:

15 cameras per store connected to local edge servers

Privacy-preserving analysis (no facial recognition, only anonymized tracking)

Heat map generation of customer movement and dwell time

Results:

8.3% increase in average transaction value

Identified underperforming product placements

Optimized staffing based on real-time customer patterns

Manufacturing: Quality Control

Edge video analytics delivers real-time quality control on production lines:

class ManufacturingQC:

def __init__(self, defect_model_path, product_specs):

self.detector = ObjectDetector(defect_model_path, confidence_threshold=0.4)

self.product_specs = product_specs

self.defect_stats = {}

self.inspection_count = 0

self.pass_count = 0

self.fail_count = 0

def inspect_item(self, frame):

"""Inspect a single product for defects"""

# Preprocess the frame

processor = FrameProcessor((640, 640))

preprocessed = processor.preprocess(frame)

# Run defect detection

defects, _ = self.detector.predict(preprocessed)

# Map defects to original coordinates

mapped_defects = [

processor.map_detection_to_original(det)

for det in defects

]

# Check if any defects violate tolerances

critical_defects = []

minor_defects = []

for defect in mapped_defects:

x, y, w, h, conf, defect_type = defect

# Check against product specifications

if defect_type in self.product_specs['critical_defects']:

critical_defects.append(defect)

else:

minor_defects.append(defect)

# Update statistics

self.inspection_count += 1

if critical_defects or len(minor_defects) > self.product_specs['max_minor_defects']:

self.fail_count += 1

result = 'FAIL'

else:

self.pass_count += 1

result = 'PASS'

# Track defect types

for defect in mapped_defects:

defect_type = int(defect[5])

if defect_type not in self.defect_stats:

self.defect_stats[defect_type] = 0

self.defect_stats[defect_type] += 1

return {

'result': result,

'critical_defects': len(critical_defects),

'minor_defects': len(minor_defects),

'defect_details': mapped_defects,

'pass_rate': self.pass_count / self.inspection_count if self.inspection_count > 0 else 0

}

Case Study: Automotive Parts Supplier

A tier-1 automotive supplier replaced manual inspection with edge analytics:

Challenge: Inconsistent quality control with 96.5% detection rate.

Solution: Vision-based automated inspection system using edge computing.

Implementation:

High-speed cameras capturing 120fps

Custom-trained defect detection models

Integration with PLC systems for automatic rejection

Results:

Improved defect detection to 99.2%

85% reduction in manual inspection costs

Real-time process feedback for continuous improvement

Challenges and Solutions

Challenge | Description | Solution |

|---|---|---|

Limited Computing Resources | Edge devices have restricted processing capabilities compared to cloud servers | Model optimization (quantization, pruning), selective processing, hardware acceleration |

Network Reliability | Intermittent connectivity can impact cloud-edge hybrid solutions | Local fallback processing, offline operation mode, connection monitoring and recovery |

Data Privacy | Processing of sensitive video data raises privacy concerns | On-device processing, metadata-only transmission, video anonymization techniques |

Environmental Variation | Lighting changes, weather conditions affect video quality | Robust preprocessing, domain adaptation techniques, specialized models for different conditions |

Model Drift | Models degrade over time as conditions change | Continuous retraining, anomaly detection for model performance, A/B testing of models |

Addressing Privacy Concerns

Edge computing inherently addresses many privacy considerations by processing data locally, but additional techniques further enhance privacy protection:

def anonymize_video_frame(frame, detections, anonymization_type='blur'):

"""Anonymize sensitive regions in a video frame"""

anonymized = frame.copy()

for detection in detections:

# Extract coordinates

x, y, w, h = detection[:4]

x, y, w, h = int(x), int(y), int(w), int(h)

# Extract region of interest

roi = anonymized[y:y+h, x:x+w]

# Apply anonymization technique

if anonymization_type == 'blur':

# Apply Gaussian blur

blurred = cv2.GaussianBlur(roi, (51, 51), 0)

anonymized[y:y+h, x:x+w] = blurred

elif anonymization_type == 'pixelate':

# Pixelate the ROI

h, w = roi.shape[:2]

temp = cv2.resize(roi, (w//8, h//8), interpolation=cv2.INTER_LINEAR)

pixelated = cv2.resize(temp, (w, h), interpolation=cv2.INTER_NEAREST)

anonymized[y:y+h, x:x+w] = pixelated

elif anonymization_type == 'silhouette':

# Replace with black silhouette

anonymized[y:y+h, x:x+w] = np.zeros_like(roi)

return anonymized

Future Trends and Emerging Technologies

Federated Learning for Edge Devices

Federated learning enables model improvement without sharing raw data:

class FederatedLearning:

def __init__(self, base_model_path, local_data_path):

self.base_model = tf.keras.models.load_model(base_model_path)

self.local_dataset = self.load_local_dataset(local_data_path)

self.current_round = 0

def load_local_dataset(self, data_path):

"""Load local dataset for training"""

# Implementation depends on data format

pass

def train_local_model(self, epochs=5):

"""Train model on local data"""

# Split dataset

train_data, val_data = self.split_dataset(self.local_dataset)

# Train the model

history = self.base_model.fit(

train_data,

validation_data=val_data,

epochs=epochs,

verbose=0

)

return history

def extract_model_updates(self):

"""Extract model weight updates for aggregation"""

# Calculate weight differences from original model

updates = []

return updates

def apply_aggregated_updates(self, aggregated_updates):

"""Apply aggregated updates from server"""

# Update local model with aggregated weights

pass

AI at the Ultra Edge

The future of edge analytics involves bringing AI to the sensor level:

Smart Sensors: Processing on the image sensor itself

Event-Based Cameras: Neuromorphic vision sensors that only send data when pixels change

AI-Enhanced Microcontrollers: Specialized hardware for ultra-low-power inference

Conclusion

Edge Video Analytics represents a critical evolution in intelligent video processing, bringing intelligence directly to data sources. The benefits—reduced latency, decreased bandwidth usage, enhanced privacy, and improved reliability—make it essential for modern video-intensive applications.

As we've explored throughout this guide, successful implementation requires careful consideration of the entire analytics pipeline, from hardware selection to software architecture, with particular attention to optimization techniques that maximize performance on constrained devices.

The key to success is balancing technical requirements with business needs. Start with clearly defined use cases, focus on optimizing for the specific deployment environment, and continuously refine your models and processing pipeline based on real-world performance.

References

Zhang, B., et al. (2024). "Workload-Aware Inference Serving for Edge Video Analytics." IEEE Transactions on Cloud Computing, 12(2), 421-436.

Yang, H., et al. (2024). "A Survey on Video Analytics in Cloud-Edge-Terminal Architectures." ACM Computing Surveys, 56(3), 1-38.

Smith, J., et al. (2024). "Energy-Efficient DNN Inference for Edge Video Analytics Via Early-Exit Mechanisms." International Conference on Computer Vision and Pattern Recognition, 2178-2187.

Lee, T., et al. (2024). "SAMEdge: An Edge-cloud Video Analytics Architecture for Seamless Integration." IEEE Edge Computing Conference, 314-323.

Chen, R., et al. (2024). "Edge Video Analytics: A Comprehensive Survey on Applications, Systems and Optimization Techniques." ACM Transactions on Sensor Networks, 20(1), 1-42.

Hoffman, K., et al. (2024). "Privacy-Preserving Edge Video Analytics with Federated Learning." Privacy Enhancing Technologies Symposium, 189-207.

Barrett, M., et al. (2023). "TinyML for Ultra-Edge Video Processing: Challenges and Opportunities." ACM Transactions on Embedded Computing Systems, 22(4), 1-27.

Patel, S., et al. (2023). "Real-time Object Detection for Edge Devices: A Comparative Study." Journal of Real-Time Image Processing, 19(3), 587-603.

Article Info

Ben

Published April 04, 2025

Engage

Table of Contents

- Prerequisites

- Architecture Design for Edge Video Analytics

- Implementation Guide

- Step 1: Video Capture Pipeline

- Step 2: Preprocessing Pipeline

- Step 3: Model Loading and Inference

- Step 4: Results Processing and Post-processing

- Step 5: Main Application Loop

- Optimization Techniques

- Real-World Applications and Case Studies

- Challenges and Solutions

- Future Trends and Emerging Technologies

- Conclusion

- References